In the post-COVID world, it has never been more important to choose the right locations to open or close stores in order to maximize sales or minimize losses. In this article, we discuss how retailers can implement quick, easy-to-use solutions that empower decision-makers to make the best choices for their store network.

Although the role of the brick-and-mortar store is changing, it remains at the heart of a retailer’s strategy for showcasing the brand and nurturing a strong relationship with customers. It is also the channel where most sales take place in most retail sectors, most notably in the restaurant & cafe sector. The geographic coverage and choice of location for a new store or restaurant are therefore key to a retailer’s growth strategy, which seeks to optimize return on investment whilst also supporting sales growth.

Applying data science to these critical decisions promises huge gains, yet few retailers take full advantage of its potential today. Our experts have already helped several clients develop a data science solution that instantly predicts the revenue potential of a future store based on its location. The tool is not only industrialized but also customizable, and it can be placed in the hands of decision-makers in less than three months.

Nowadays, retailers are able to collect huge amounts of transactional data from their stores. Although this type of data is no doubt valuable, it is not sufficient on its own to create a robust prediction model for future store openings.

Enriching a model with qualitative information and external (sometimes unstructured) data sources is thus a major challenge for the success of such projects. Examples include the number of parking spots or road access, both of which can be among the most important drivers of a store’s performance. Unfortunately, not all retailers have the data maturity to provide such information in a simple and reliable way. There can be several reasons for this:

These hurdles pale in comparison to the losses incurred by making the wrong choice and should not stop retailers from improving their decision-making processes. Opening a new store requires a substantial investment that must be profitable, and decision-makers have long been crying out for a reliable, data-driven solution to help them make the right strategic choices.

We see many retailers resorting to tech-first, off-the-shelf turnkey solutions out of habit rather than by choice. As one of our clients in the retail banking sector explained, software-driven “one size fits all” solutions often fail to account for data challenges and specific business requirements, and are therefore neither fine-grained nor relevant enough in the eyes of regular data users and business experts.

Lack of transparency in the results is a frequent criticism. This is precisely the danger when a “technology first” AI black-box approach is prioritized at the expense of business expertise. Many experts have become disenchanted with these tools and with forecasts they can barely understand, which creates accountability issues and fuels an endless war between the proponents of human and machine approaches.

These tools, deployed on a wide scale, are designed for general use and fail to cater to the specificities of a particular business and the diversity of its uses. For example, a growing business looking to expand its footprint has a sea of opportunities: it must pick the best location quickly, but runs little risk of cannibalization. A player with a large network and existing high-density coverage, on the other hand, must make the optimal choice among a limited number of expansion options.

Static solutions are another common problem. In a fluctuating economic environment, the solution must be flexible enough to incorporate new market rules as they change. New competitors may enter, existing ones may speed up their development, or the attractiveness of an area may evolve because of new real-estate projects (shopping malls) or cultural projects (movie theatres, museums, etc.). A solution that fails to factor in new information, such as these structural changes, will soon lose all credibility with its users and turn out to be a “sunk cost”.

Since 2006, our team of 280+ cross-disciplinary experts has conducted over 1,000 data science projects and has regularly faced the question “Where should I open my next store?” in a variety of sectors, including specialized retail, fast-food franchising, retail banking and insurance. Thanks to our experience, our understanding of the common challenges and our collaborative approach with business experts, we are able to deliver solutions that are Usable, Useful and Used within a short time frame.

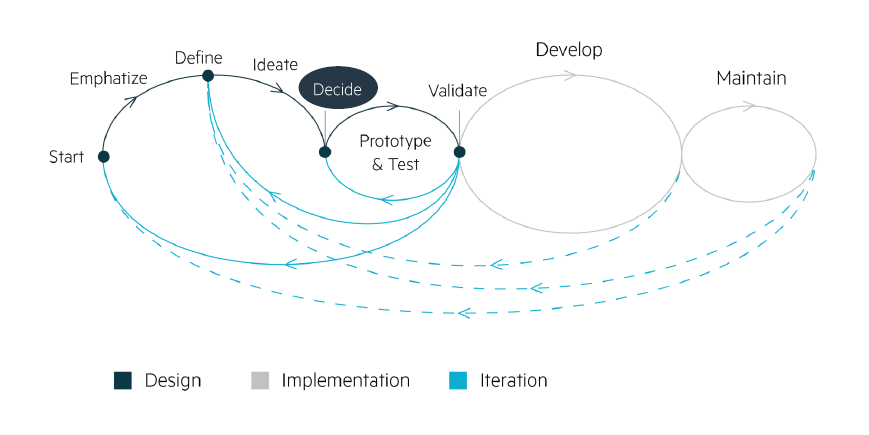

The solution should address a common base of needs, alleviate any tensions or “friction points” in existing processes and facilitate decision-making. These friction points get in the way of implementing the software and push users towards quick fixes: a recurring example, which we often see among our clients, is an external spreadsheet used to cover a need that was not properly identified upstream. The success of this type of project therefore lies in the ability to precisely identify the key business issues and design a solution that closely matches the needs of end-users. With this design thinking approach, we involve end-users in the iterative creation process early on, in order to deliver a solution that is fit for purpose. This method lets us rapidly confirm or refute our working hypotheses and make sure we address every issue raised at each stage of the project.

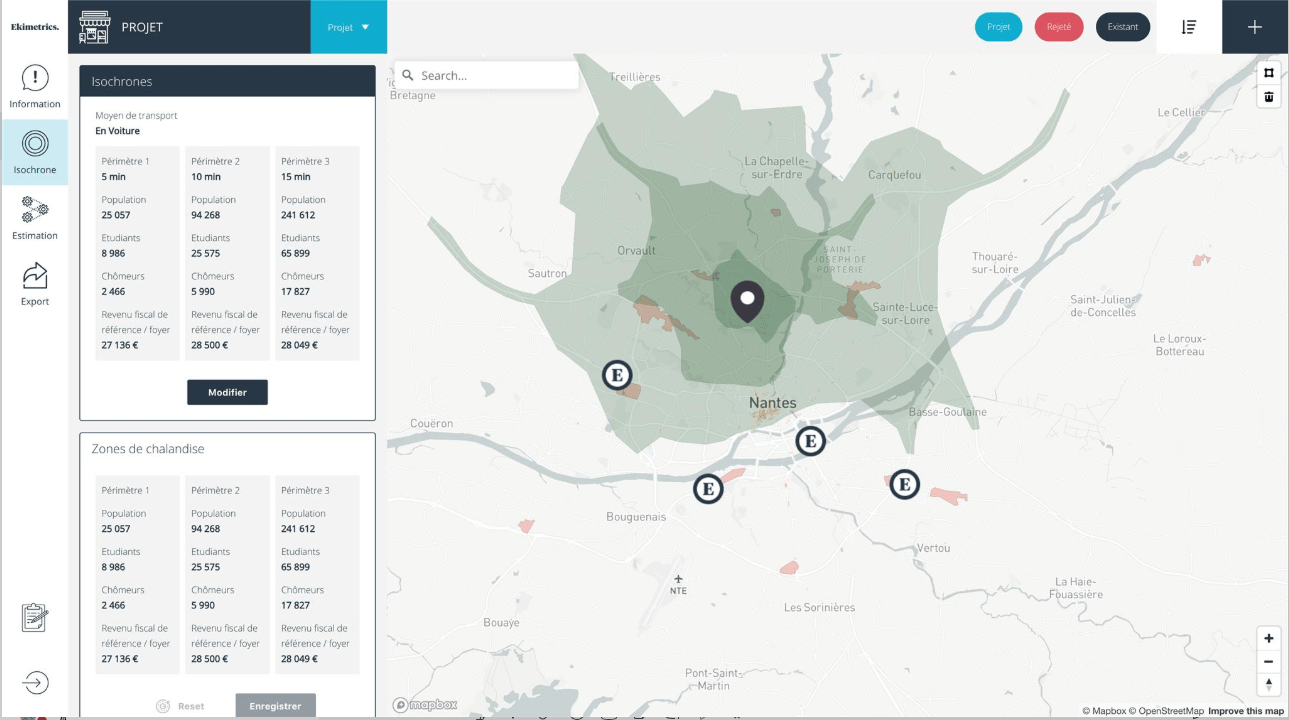

For example, thanks to early involvement and interviews, we discovered that one of our clients needed to generate reports automatically. These documents consolidated the demographic and economic data of the catchment areas defined by the users. Although this is not pure forecasting, catering for this type of feature fosters strong buy-in. The application is then no longer just a data tool but a decision-making aid that makes users’ lives easier.
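As an illustration of what such a report consolidates, here is a minimal sketch, assuming the catchment area is a simple circle around the candidate site and that demographic data comes as small statistical zones with a centroid. The `Zone` schema, its field names and `catchment_summary` are hypothetical; real catchment areas are often drawn by hand or based on drive times.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0


@dataclass
class Zone:
    """Hypothetical statistical zone: centroid coordinates plus a few indicators."""
    lat: float
    lon: float
    population: int
    households: int
    median_income: float


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def catchment_summary(site_lat, site_lon, radius_km, zones):
    """Consolidate every zone whose centroid lies inside a circular catchment area."""
    inside = [z for z in zones if haversine_km(site_lat, site_lon, z.lat, z.lon) <= radius_km]
    population = sum(z.population for z in inside)
    households = sum(z.households for z in inside)
    # A population-weighted average of zone medians only approximates the area's true median.
    weighted_income = (
        sum(z.median_income * z.population for z in inside) / population if population else 0.0
    )
    return {
        "zones": len(inside),
        "population": population,
        "households": households,
        "weighted_median_income": round(weighted_income, 2),
    }
```

The returned dictionary is the kind of per-site summary a report template could then render; the same function can be reused for each catchment area a user draws.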

Prototypes in the form of interactive mock-ups make things more tangible: rethinking a business process around mock-ups enables users to identify missing information, check that a template is consistent enough to generate a new object, or confirm that the button for validating an action is clearly visible. Our aim is to focus on implementing the features that add the most value. A tool’s ergonomics also matter for the client’s productivity gains, all the more so when planning the location of sales points, where a mapping application is also needed. Navigating between places, zoom levels and map types must be as effortless as possible so that users can focus on the highest value-added tasks.

This design thinking phase allows us to build the deployment roadmap for the solution and to prioritize uses and features. Co-constructing the roadmap with the client helps ensure that the solution is implemented optimally. For example, one of our clients did not hesitate to de-prioritize a complex visualization of performance indicators in favour of a new section supporting the announcement of a new home-delivery service. In another example, a retail client wanted to improve communication between teams by adding the ability to comment on the application’s maps: this feature proved quite simple to develop and saved the teams a lot of time in their daily work.

In the search for the best algorithm, it may be tempting to emphasize the model’s predictive quality at the expense of its interpretability. We are convinced that combining both is essential to building a good model, and that it yields a solution business experts will understand and use. Predictive performance cannot be sacrificed, however: the virtuous cycle that comes from using the tool depends on it just as much.

We typically work with modular modelling blocks that dovetail into one another. The central block remains the revenue prediction algorithm, which must perform as well as possible. In most cases, the methodology chosen depends on the size of the store network available for training and on the number of candidate input variables.
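The article does not say which algorithm sits in the central block. Purely as an illustration, the sketch below assumes a ridge regression from location features to annual revenue, a common choice when the store network is small relative to the number of candidate variables, because the L2 penalty `alpha` (a hypothetical tuning knob here) shrinks the coefficients. `RevenueModel` is a hypothetical name, and a larger network might well justify a more flexible model.

```python
import numpy as np


class RevenueModel:
    """Hypothetical central block: ridge regression from location features to revenue."""

    def __init__(self, alpha=1.0):
        # L2 penalty: larger values shrink coefficients, useful when stores are few
        # compared with the number of candidate variables.
        self.alpha = alpha

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y, dtype=float)
        # Standardize features so the penalty treats every variable on the same scale.
        self.mean_ = X.mean(axis=0)
        self.scale_ = X.std(axis=0)
        self.scale_[self.scale_ == 0] = 1.0  # avoid dividing by zero on constant columns
        Z = (X - self.mean_) / self.scale_
        # With centred features, the unpenalized intercept is simply the mean revenue.
        self.intercept_ = y.mean()
        # Closed-form ridge solution: (Z'Z + alpha * I)^-1 Z'(y - mean(y)).
        A = Z.T @ Z + self.alpha * np.eye(Z.shape[1])
        self.coef_ = np.linalg.solve(A, Z.T @ (y - self.intercept_))
        return self

    def predict(self, X):
        Z = (np.asarray(X, dtype=float) - self.mean_) / self.scale_
        return self.intercept_ + Z @ self.coef_
```

Keeping the model behind a small `fit`/`predict` interface is what makes it swappable as one block among others: the interpretability and cannibalization modules only need its predictions and, for a linear model, its coefficients.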

We also invest significant time in an additional modelling layer: a building block dedicated to the interpretability of our prediction model. It shows which drivers underpin the over- or under-performance expected from a store in a given location, highlighting issues such as:

These business questions must be answered to confirm the investment choices recommended by the solution, or to rule them out as the case may be.
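The article does not describe how the interpretability block works. The sketch below assumes the revenue model is linear on standardized features: in that case a prediction splits exactly into one contribution per driver, measured against an average store. For non-linear models, SHAP values are a widely used generalization of the same idea. All feature names, means, scales and coefficients in the test are invented for illustration.

```python
def explain_prediction(features, means, scales, coefs, intercept):
    """Split a linear prediction into per-driver contributions relative to an average store.

    Assumes the model is prediction = intercept + sum(coef * (value - mean) / scale),
    so the contributions add up exactly to the prediction minus the intercept.
    """
    contributions = {}
    for name, value in features.items():
        z = (value - means[name]) / scales[name]  # standardized deviation from the average store
        contributions[name] = coefs[name] * z
    prediction = intercept + sum(contributions.values())
    # Rank drivers by the size of their effect, whatever its sign.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return prediction, ranked
```

A positive contribution explains expected over-performance and a negative one under-performance, which is the kind of output business teams can challenge line by line.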

This interpretability layer is therefore crucial in several respects: it makes the model easier to understand, speeds up adoption, and enables the business teams to help improve the solution through an iterative process. Once the initial results are in, these iterations with the business teams allow us to identify the model’s limitations and improve it. For instance, with one of our clients, we discovered that predictions were less reliable for points of sale in the most popular tourist cities. Through our discussions with the experts, we came to test new macro-economic variables. In the end, we created a compound variable based on hotel capacity, the number of nearby campsites and road traffic flows, whereas the previous version of the algorithm included only road traffic flows. This yielded a two-point improvement.
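The article does not give the formula behind the compound tourism variable. One simple way to build such a variable, assumed here purely as an illustration, is a weighted average of z-scores, so that hotel beds, campsite counts and traffic flows, which live on very different scales, become comparable. The equal weights are a hypothetical default.

```python
from statistics import mean, pstdev


def zscores(values):
    """Standardize a column; a constant column carries no signal and maps to zeros."""
    mu, sigma = mean(values), pstdev(values)
    return [0.0 if sigma == 0 else (v - mu) / sigma for v in values]


def tourism_index(hotel_beds, campsites, traffic, weights=(1 / 3, 1 / 3, 1 / 3)):
    """Hypothetical compound variable: weighted average of standardized inputs per store."""
    columns = [zscores(hotel_beds), zscores(campsites), zscores(traffic)]
    return [
        sum(w * col[i] for w, col in zip(weights, columns))
        for i in range(len(hotel_beds))
    ]
```

Whether such an index beats the raw traffic variable is an empirical question, settled by comparing validation error with and without it, as in the iteration described above.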

To maximize the impact of a distribution network, you must also consider cannibalization between sales points. A location with very strong revenue potential cannot be deemed optimal if it causes significant revenue drops in neighbouring stores. But how can you know that in advance? This is why we added a third module to our solution that predicts these potential losses and measures the net effect of a new store opening. This matters most for players with high-density territorial coverage, such as certain restaurant franchises, or in industries such as telecommunications, banking and insurance.
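The net effect described above is the candidate site’s predicted revenue minus the losses expected at nearby stores. The article does not say how those losses are predicted. The sketch below assumes a hypothetical exponential distance-decay rule, where each neighbour loses a share of its revenue that shrinks with distance, so only the arithmetic of the net effect should be taken from it. `max_share`, `decay_km` and `radius_km` are invented parameters that would normally be estimated from past openings.

```python
import math
from dataclasses import dataclass


@dataclass
class Store:
    name: str
    revenue: float      # current annual revenue of the existing store
    distance_km: float  # distance from the candidate site


def net_opening_effect(candidate_revenue, neighbours, max_share=0.3, decay_km=2.0, radius_km=10.0):
    """Hypothetical cannibalization module: gross revenue of the new site minus expected losses."""
    losses = {}
    for store in neighbours:
        if store.distance_km > radius_km:
            continue  # assumption: no cannibalization beyond the radius
        # Assumed rule: the lost share decays exponentially with distance.
        share = max_share * math.exp(-store.distance_km / decay_km)
        losses[store.name] = store.revenue * share
    return candidate_revenue - sum(losses.values()), losses
```

Ranking candidate sites on this net figure rather than on gross predicted revenue is what keeps a dense network from competing against itself.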

An extensive kick-off process at the start of a project also helps us define the technology choices needed to meet users’ operational constraints. In a revenue forecasting solution, the web application’s response time matters when testing several locations within a given area: it lets users rapidly compare various store characteristics without friction in their user experience. We therefore stressed the trade-off between response time and the cost of the cloud architecture, which calls for modular data pre-processing blocks.
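The article does not detail its architecture. One common way to keep responses fast without paying for heavy compute on every click, assumed here, is to run the expensive pre-processing once per small grid cell and cache the result, so that nearby candidate locations reuse it. The grid resolution, `cell_features` and the simulated delay are all hypothetical; `functools.lru_cache` is the standard-library memoization decorator.

```python
import time
from functools import lru_cache

GRID_DEG = 0.01  # hypothetical grid resolution, roughly 1 km in latitude


def snap(lat, lon):
    """Map a location to its grid cell so that nearby queries share one pre-processed result."""
    return round(lat / GRID_DEG), round(lon / GRID_DEG)


@lru_cache(maxsize=10_000)
def cell_features(cell):
    """Stand-in for a costly pre-processing step (spatial joins, aggregations, ...)."""
    time.sleep(0.1)  # simulated latency of the heavy computation
    i, j = cell
    # The cached dict is shared between callers, so treat it as read-only.
    return {"cell_id": f"{i}_{j}"}


def features_for(lat, lon):
    """Return pre-processed features for a candidate location, computing them at most once per cell."""
    return cell_features(snap(lat, lon))
```

The grid resolution is itself a cost/accuracy trade-off: coarser cells mean more cache hits but blurrier features. In production the cache would more likely live in a shared store than in process memory.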

Real-time algorithm updates can also be a question of great importance, and our approach varies with the context. In some retail sectors, we observed that the business impact of updating the algorithm daily does not justify the high costs of making it possible. Most of the time we favour retraining the model monthly, although this may range from 1 to 4 depending on the case. In our view, this periodicity offers the best trade-off between cost and enough flexibility to take new structural phenomena into account, such as the emergence of a competitor or the launch of new services. Explaining the ins and outs of these choices, and involving the client in these decisions, is key to making constructive progress and maximizing the chances of success.
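A retraining policy like the one described can be expressed as a small rule. The sketch below assumes a fixed cadence (30 days by default, a hypothetical value standing in for “monthly”) plus an early trigger whenever a structural event, such as a competitor opening, has been logged since the last training run. The function name and the event log are hypothetical.

```python
from datetime import date


def should_retrain(last_trained: date, today: date, interval_days: int = 30,
                   structural_events: tuple = ()) -> bool:
    """Hypothetical policy: retrain on a fixed cadence, or early after a structural change."""
    # Any structural event logged after the last training run triggers an early retrain.
    if any(event_date > last_trained for event_date in structural_events):
        return True
    # Otherwise, retrain only once the regular interval has elapsed.
    return (today - last_trained).days >= interval_days
```

Making `interval_days` a parameter keeps the cadence a business decision that can be revisited with the client, rather than something buried in the pipeline.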

The projects we have worked on to optimize the performance of our clients’ physical distribution networks have indeed confirmed our initial intuition: don’t throw your weight behind an exclusively technology-driven solution! The success of your solution depends on getting the right mix of human aspects (business expertise, usage, decision-making processes, data relevance) and data science best practices (modular approach, architectural agility, flow automation, optimized retraining), in order to meet users’ expectations and deliver gains that can be quantified at scale.
